For more on the procedures that researchers use to ensure external validity, see Chapter 7, pp. 186–191.

You now have the tools to differentiate the three major claims you’ll encounter in research journals and the popular media—but your job is just beginning. Once you identify the kind of claim a writer is making, you need to ask targeted questions as a critically minded consumer of information. The rest of this chapter will sharpen your ability to evaluate the claims you come across, using what we’ll call the four big validities: construct validity, external validity, statistical validity, and internal validity (Cook & Campbell, 1979; Shadish et al., 2002). Validity refers to the appropriateness of a conclusion or decision, and in general, a valid claim is reasonable, accurate, and justifiable. In psychological research, however, we do not say a claim is simply “valid.” Instead, psychologists specify which of the validities they are applying. As a psychology student, you will learn to pause before you declare a study to be “valid” or “not valid” and to specify which of the four big validities the study has achieved.

Although the focus for now is on how to evaluate other people’s claims in terms of the four big validities, you’ll also be using this same framework if you plan to conduct your own research. Whether you decide to test a frequency claim, an association claim, or a causal claim, it is essential to plan your research carefully, emphasizing the validities that are most important for your goals.

To evaluate how well a study supports a frequency claim, you will focus on two of the big validities: construct validity and external validity. You may decide to ask about statistical validity, too.

Construct validity refers to how well a conceptual variable is operationalized. When you ask how well a study measured or manipulated a variable, you are interrogating construct validity. The variable might be smiling, smoking, texting, gender identity, food insecurity, or knowing when news is fake. For example, when evaluating the construct validity of a frequency claim, the question is how well the researchers measured their variable of interest. Consider this claim: “39% of teens text while driving.” There are several ways to measure this variable, though some are better than others. You could ask teenagers to tell you on an online survey how often they engage in text messaging while they’re behind the wheel. You could stand near an intersection and record the behaviors of teenage drivers. You could even use cell phone records to see if a text was sent at the same time a person was known to be driving. You would expect the study behind this claim to use an accurate measure of texting among teenagers, and observing behavior is probably a better way than casually asking, “Have you ever texted while driving?”

To ensure construct validity, researchers must establish that each variable has been measured reliably (meaning the measure yields similar scores on repeated testings) and that different levels of a variable accurately correspond to true differences in, say, texting or happiness. (For more detail on construct validity, see Chapter 5.)

The next important questions to ask about frequency claims concern generalizability: How did the researchers choose the study’s participants, and how well do those participants represent the intended population? Consider the example “74% of the world smiled yesterday.” Did Gallup researchers survey every one of the world’s 8 billion people to come up with this number? Of course not. They surveyed a smaller sample of people. Next you ask: Which people did they survey, and how did they choose their participants? Did they include only people in major urban areas? Did they ask only college students from each country? Or did they attempt to randomly select people from every region of the world?

Such questions address the study’s external validity: how well the results of a study generalize to, or represent, people or contexts besides those in the original study. Suppose Gallup researchers had simply asked people who clicked on the Gallup website whether they smiled yesterday, and 74% of them said they did. The researchers could not claim that 74% of the entire world did. They could not even argue that 74% of Gallup website visitors smiled or laughed, because the people who choose to answer such questions may not accurately represent all visitors. Indeed, to claim the 74% number, the researchers would have needed to ensure that the participants in the sample adequately represented all people in the world. That is a daunting task! Gallup’s Global Emotions Report states that its sample includes adults in each of 140 countries who were interviewed by phone or in person (Gallup.com, 2019). The researchers attempted to obtain representative samples in each country (excluding very remote or politically unstable areas of certain countries).

Researchers use statistics to analyze their data. Statistical validity, also called statistical conclusion validity, is the extent to which a study’s statistical conclusions are precise, reasonable, and replicable. How well do the numbers support the claim?

To understand statistical validity, it helps to know that the value we get from a single study is not an objective truth. Instead, it’s an estimate of that value in some population. For example, for the report claiming that “39% of teenagers text while driving,” researchers interviewed a sample of about 9,000 teen drivers to estimate the behavior of the population of all U.S. teenage drivers. To evaluate statistical validity, we start with the point estimate. In a frequency claim, the point estimate is usually a percentage.

Next we ask about the precision of that estimate. For a frequency claim, precision is captured by the confidence interval, or margin of error, of the estimate. The confidence interval is a range designed to include the true population value a high proportion of the time. In the report about how many teenagers text while driving, the 39.2% point estimate was accompanied by a confidence interval of 37.0% to 41.4% (CDC, n.d.). This means that the true percentage of teens who text while driving may be as low as 37.0% or as high as 41.4%, although any single interval might miss the true value. An analogy for the confidence interval is a contractor who estimates your home repair will cost “between $1,000 and $1,500.” The repair will probably cost something in that range (but might cost less or more).
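To make the idea of a confidence interval concrete, here is a minimal Python sketch. It assumes the simplest case, a simple random sample, and uses the textbook normal-approximation (Wald) formula, p̂ ± 1.96 × √(p̂(1 − p̂)/n). The function names `wald_ci` and `coverage` are illustrative and do not come from any study. With the 39.2% estimate and roughly 9,000 teens, this formula gives a narrower interval than the published 37.0% to 41.4%. Large national surveys often use clustered, weighted sampling designs that widen their intervals, so the sketch should not be expected to reproduce the CDC figure. The second function checks the "high proportion of the time" idea. It draws many simulated samples from a hypothetical population whose true value is known and counts how often the interval captures that value.

```python
import math
import random


def wald_ci(p_hat, n, z=1.96):
    """Approximate 95% CI for a proportion, assuming a simple random sample."""
    se = math.sqrt(p_hat * (1 - p_hat) / n)  # standard error of the proportion
    return p_hat - z * se, p_hat + z * se


def coverage(true_p, n, reps, seed=0):
    """Share of simulated samples whose Wald interval contains true_p."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        # Draw one sample of n people; each answers "yes" with probability true_p.
        successes = sum(rng.random() < true_p for _ in range(n))
        low, high = wald_ci(successes / n, n)
        if low <= true_p <= high:
            hits += 1
    return hits / reps


# Point estimate and approximate sample size quoted in the text.
low, high = wald_ci(0.392, 9000)
print(f"simple-random-sample 95% CI: {low:.1%} to {high:.1%}")

# Hypothetical population with a true rate of 39%, sampled 2,000 times.
cov = coverage(true_p=0.39, n=400, reps=2000)
print(f"intervals containing the true value: {cov:.1%}")
```

With these settings the coverage should come out close to 95%. In other words, roughly 95 of every 100 intervals contain the true value, and the rest miss it.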

Finally, statistical validity improves with multiple estimates. Researchers ideally conduct studies more than once and then consider the results of all investigations of the same topic. Combining many estimates is better than using a single one. We should consider each study in the context of multiple results on the same question, collected over the long run.
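One common way researchers combine estimates is meta-analysis. The Python sketch below shows its simplest version, a fixed-effect, inverse-variance weighted average. In this approach, more precise studies (those with smaller standard errors) count more. The three estimates and standard errors are hypothetical numbers invented for illustration. Real meta-analyses often use more elaborate models, such as random-effects models.

```python
import math


def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted mean of several estimates (fixed-effect model)."""
    weights = [1 / se ** 2 for se in standard_errors]  # precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return pooled, pooled_se


# Hypothetical results from three studies of the same percentage.
estimates = [39.2, 42.0, 36.5]     # point estimates (%)
standard_errors = [1.1, 2.0, 1.5]  # standard errors (percentage points)

pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)
print(f"pooled estimate: {pooled:.1f}% (standard error {pooled_se:.2f})")
```

For these made-up inputs the pooled estimate is about 38.9%, with a standard error of about 0.81. That pooled standard error is smaller than any single study's standard error. This is one way to see why combining many estimates beats relying on one.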

Correlational studies, which support association claims, measure two variables instead of one. Such studies describe how these variables are related to each other. To interrogate an association claim, you ask how well the correlational study behind the claim supports construct, external, and statistical validities.

To support an association claim, a researcher measures two variables, so you have to assess the construct validity of each variable. For the headline “Study links coffee consumption to lower depression in women,” you should ask how well the researchers measured coffee consumption and how well they measured depression. The first variable, coffee consumption, could be measured by asking people to document their food and drink intake every day for some period of time. The second variable, depression, could be measured using a series of questions developed by clinical psychologists that ask about depression symptoms.

In any study, measuring variables is a fundamental strength or weakness—and construct validity questions assess how well such measurements were conducted. If you conclude that one of the variables was measured poorly, you would not be able to trust the study’s conclusions. However, if you conclude that the construct validity of both variables was excellent, you can have more confidence in the association claim being reported.

You might also interrogate the external validity of an association claim by asking whether it can generalize to other populations, as well as to other contexts, times, or places. For example, the association between coffee consumption and depression came from a study of women. Will the association generalize to men? You can also evaluate generalizability to other contexts by asking, for example, whether the link between coffee consumption and depression might be generalizable to other forms of caffeine (such as tea or cola). Similarly, if a study found a link between exercise and income, you can ask whether the study, conducted on Americans, can generalize to people in Canada, Mexico, or Japan. Table 3.5 summarizes the four big validities used in this text.

Table 3.5

The Four Big Validities

| Type of validity | Description |
| --- | --- |
| Construct validity | How well the variables in a study are measured or manipulated. The extent to which the operational variables in a study are a good approximation of the conceptual variables. |
| External validity | The extent to which the results of a study generalize to some larger population (e.g., whether the results from this sample of teenagers apply to all U.S. teens), as well as to other times or situations (e.g., whether the results based on coffee apply to other types of caffeine). |
| Statistical validity | How well the numbers support the claim: how strong the effect is and the precision of the estimate (the confidence interval). Also takes into account whether the study has been replicated. |
| Internal validity | In a relationship between one variable (A) and another (B), the extent to which A, rather than some other variable (C), is responsible for changes in B. |

When applied to an association claim, statistical validity considers how strong the estimated association is and how precise that estimate is, and it considers other estimates of the same association.

The first aspect of the statistical validity of an association is strength: How strong is the estimated association? Some associations, such as the association between education and income, are quite strong. People with bachelor’s degrees usually earn much more money than those with only a high school diploma: about 66% more income over a 40-year career (The College Board, n.d., based on data from the U.S. Census Bureau). Other associations, such as the association between exercise and income, are weaker. The study on exercise and income found that frequent exercisers earn about 9% more money than others (Kosteas, 2012).

We can also ask about the precision of the estimated association. The estimate of an association (e.g., 9% higher income for frequent exercisers) can be accompanied by a confidence interval, just as it can for frequency claims. We might read, for example, that frequent exercisers earn “between 6% and 12%” more income than others. The confidence interval is designed to capture the true relationship between exercising and income in a high proportion of cases. Studies with smaller samples (fewer people) have wider, less precise intervals that reflect greater uncertainty (e.g., 1% to 25%). Studies with larger samples have narrower, more precise intervals (e.g., 8% to 10%). Finally, we ask whether the study has been conducted more than once, because multiple estimates of the association are better than one.
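The link between sample size and precision follows from how standard errors behave: for most estimates, the standard error shrinks roughly in proportion to 1/√n. The Python sketch below illustrates this with the simplest case, the margin of error for a proportion, rather than the income-difference estimate discussed in the text. The function name and the sample sizes are illustrative assumptions.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Half-width of an approximate 95% CI for a proportion p with sample size n."""
    return z * math.sqrt(p * (1 - p) / n)


# Illustrative sample sizes; p = 0.5 gives the widest (most conservative) interval.
for n in (100, 400, 1600, 6400):
    print(f"n = {n:>5}: margin of error = ±{100 * margin_of_error(0.5, n):.1f} points")

ratio = margin_of_error(0.5, 100) / margin_of_error(0.5, 400)
print(f"quadrupling n shrinks the margin by a factor of {ratio:.1f}")
```

Each fourfold increase in sample size cuts the margin of error in half. This is why small studies tend to report wide intervals and large studies narrow ones.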

For more about association strength and statistical significance, see Chapter 8, pp. 205–206 and pp. 213–214.

As you might imagine, evaluating statistical validity can be complicated. Full training in how to interrogate statistical validity requires a separate, semester-long statistics class. This book introduces you to the basics and focuses on the strength of the estimated effect, the precision of that estimate (its confidence interval), and replication over the long run.

In sum, when you come across an association claim, you should ask about three validities: construct, external, and statistical. You can ask how well the two variables were measured (construct validity). You can ask whether the result generalizes to other populations, contexts, times, or places (external validity). And you can ask about the strength of the association and the precision of this estimate (statistical validity).

Table 3.6 gives an overview of the three-claims, four-validities framework. Before reading about how to interrogate causal claims, use the table to review what we’ve covered so far.

Table 3.6

Interrogating the Three Types of Claims Using the Four Big Validities

| Type of validity | Frequency claims (“4 in 10 teens admit to texting while driving”) | Association claims (“study links exercise to higher pay”) | Causal claims (“pretending to be Batman helps kids stay on task”) |
| --- | --- | --- | --- |
| | Usually based on a survey or poll, but can come from other types of studies | Usually supported by a correlational study | Must be supported by an experimental study |
| Construct validity | How well has the researcher measured the variable in question? | How well has the researcher measured each of the two variables in the association? | How well has the researcher measured or manipulated the variables in the study? |
| Statistical validity | What is the confidence interval (margin of error) of the estimate? Are there other estimates of the same percentage? | What is the estimated effect size: How strong is the association? How precise is the estimate: What is the confidence interval? What do estimates from other studies say? | What is the estimated effect size: How large is the difference between groups? How precise is the estimate: What is the confidence interval? What do estimates from other studies say? |
| Internal validity | Frequency claims do not usually assert causality, so internal validity is not relevant. | People who make association claims are not asserting causality, so internal validity is not relevant. A writer should avoid making a causal claim from a simple association, however (see Chapter 8). | Was the study an experiment? Does the study achieve temporal precedence? Does the study control for alternative explanations by randomly assigning participants to groups? Does the study avoid internal validity threats (see Chapters 10 and 11)? |
| External validity | To what populations, settings, and times can we generalize this estimate? How representative is the sample? Was it a random sample? | To what populations, settings, and times can we generalize this association claim? How representative is the sample? To what other situations might the association be generalized? | To what populations, settings, and times can we generalize this causal claim? How representative is the sample? How representative are the manipulations and measures? |

An association claim says that two variables are related. A causal claim goes further, saying that one variable causes the other. Instead of using such verb phrases as *is associated with*, *is related to*, and *is linked to*, causal claims use directional verbs such as *affects*, *leads to*, and *reduces*. When you interrogate such a claim, your first step will be to make sure it is backed up by research that fulfills the three criteria for causation: covariance, temporal precedence, and internal validity.

Of course, one variable usually cannot be said to cause another variable unless the two are related. Covariance, the extent to which two variables are observed to go together, is established by the results of a study. It is the first criterion a study must satisfy in order to establish a causal claim. But showing that two variables are associated is not enough to justify using a causal verb. The research method must also satisfy two additional criteria: temporal precedence and internal validity (Table 3.7).

To say that a study establishes temporal precedence means that the method was designed so that the causal variable clearly comes first in time, before the effect variable. To make the claim “Pretending to be Batman helps kids stay on task,” a study must show that “pretending to be Batman” came first and staying on task came later. Although this statement might seem obvious, it is not always so. Consider the claim “Pressure to be available 24/7 on social media causes teen anxiety.” It might be the case that social media behavior came first, causing anxiety, but it is also possible that teens start out anxious, and that anxiety spreads to their social media behavior. Therefore, to support the causal claim, a study needs to establish that social media behavior came first and the anxiety came later.

Table 3.7

Three Criteria for Establishing Causation Between Variable A and Variable B

| Criterion | Definition |
| --- | --- |
| Covariance | The study’s results show that as A changes, B changes; e.g., high levels of A go with high levels of B, and low levels of A go with low levels of B. |
| Temporal precedence | The study’s method ensures that A comes first in time, before B. |
| Internal validity | The study’s method ensures that there are no plausible alternative explanations for the change in B; A is the only thing that changed. |

Another criterion, called internal validity or the third-variable criterion, refers to a study’s ability to eliminate alternative explanations for the association. For example, to say “Pretending to be Batman helps kids stay on task” is to claim that pretending to be a hardworking hero like Batman causes increased persistence. But an alternative explanation could be that older kids are both more likely to pretend to be hardworking heroes and better able to stay on task. In other words, there could be an internal validity problem: the child’s maturity, not pretending to be Batman, might be what leads children to persist longer.
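A small simulation can show how a third variable produces an association without any causal link. In this hypothetical Python sketch, each simulated child's persistence depends only on age plus random noise, and pretending has no effect at all. When older children are more likely to choose to pretend, pretending and persistence still end up correlated. When pretending is instead assigned by a coin flip, the correlation disappears. The age range, the size of the age effect, and the noise level are invented for illustration. They are not taken from White et al. (2017).

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate(n, randomize, seed=1):
    """Correlation between pretending (0/1) and persistence in simulated children.

    Persistence depends only on age; pretending has NO causal effect.
    randomize=False: older children pretend more often (age is a confound).
    randomize=True: pretending is assigned by coin flip, independent of age.
    """
    rng = random.Random(seed)
    pretend, persistence = [], []
    for _ in range(n):
        age = rng.uniform(4, 8)  # hypothetical age range in years
        p_pretend = 0.5 if randomize else (age - 4) / 4
        pretend.append(1 if rng.random() < p_pretend else 0)
        persistence.append(10 * age + rng.gauss(0, 5))  # age plus noise only
    return pearson_r(pretend, persistence)


confounded = simulate(10_000, randomize=False)
randomized = simulate(10_000, randomize=True)
print(f"self-selected pretending:     r = {confounded:.2f}")
print(f"randomly assigned pretending: r = {randomized:.2f}")
```

The first correlation is clearly positive even though pretending does nothing in this simulation. The second is close to zero. The next part of this chapter explains how experiments use random assignment to rule out explanations like this one.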

For examples of independent and dependent variables, see Chapter 10, pp. 281–283.

Usually, to support a causal claim, researchers must conduct a well-designed experiment, in which one variable is manipulated and the other is measured. Experiments are considered the gold standard of psychological research because of their potential to support causal claims. In daily life, people tend to use the word experiment casually, referring to any trial of something to see what happens (“Let’s experiment and try making the popcorn with olive oil instead”). In science, including psychology, an experiment is more than just “a study.” When psychologists conduct an experiment, they manipulate the variable they think is the cause and measure the variable they think is the effect (or outcome). In the context of an experiment, the manipulated variable is called the independent variable and the measured variable is called the dependent variable. To support the claim about Batman, the researchers in that study would have had to manipulate the variable “pretending to be Batman” and measure the persistence variable.

Remember: To manipulate a variable means to assign participants to be at one level or the other. In the study that tested the persistence claim, researcher Rachel White and five research colleagues (2017) recruited 90 typically developing 6-year-old children. They asked all 90 kids to do a slow-paced, boring computer task. The kids were told to work as hard as they could on the boring task, but they could take breaks to play an enticing game on an iPad. (This was how the researchers operationalized “staying on task” in their study.)

The study was meant to test whether kids would stay on the computer task longer if they took a self-distanced perspective. Self-distancing means taking an outsider’s view of your own behavior, like being a fly on the wall. White and colleagues manipulated the self-distancing variable by putting kids in one of three groups. Children in one group were encouraged to self-distance by pretending to be a hardworking hero—such as Batman, Bob the Builder, or Dora the Explorer—and were given a costume to wear. The researchers told these children to ask themselves as they worked on the boring task, “Is [Batman/Bob/Dora] working hard?”

A second group of children was told to think of themselves from a third-person perspective, asking “Is [child’s name] working hard?” A third group of children was called self-immersed—the condition lowest in self-distancing. They were told to ask the first-person question, “Am I working hard?”

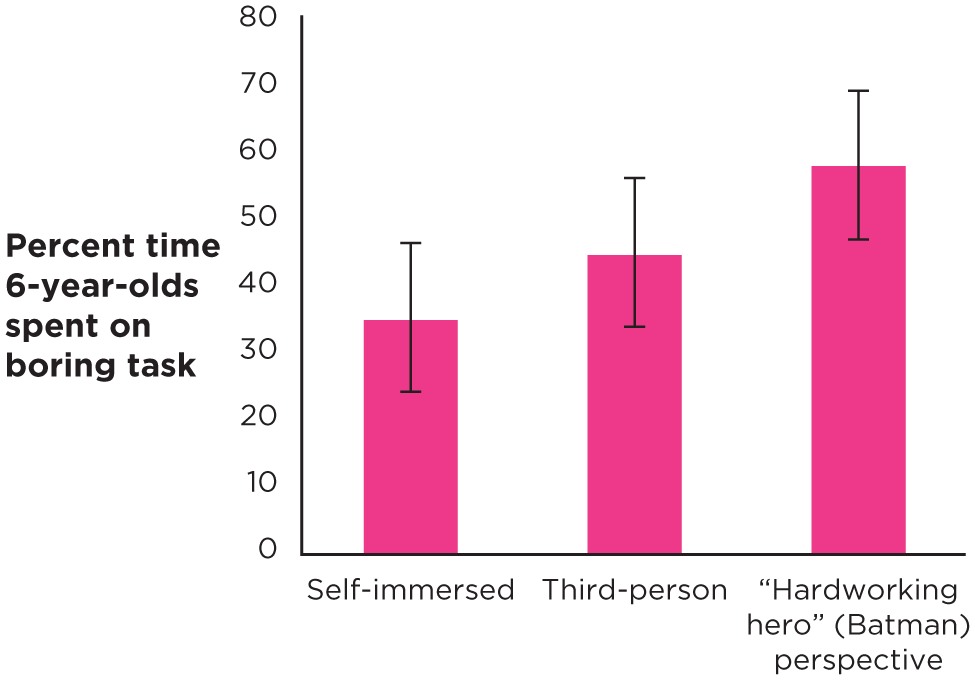

At the conclusion of the study, White and colleagues found that the 6-year-olds who were in the hardworking hero condition spent almost 60% of their time on the boring task (and only 40% on the iPad). Children in the third-person condition spent 45% of their time on the boring task. Children in the self-immersed condition spent only 35% of their time on the boring task (Figure 3.4). The results of White et al.’s study showed covariance: Being in the Batman condition covaried with persisting longer on the boring task.

Figure 3.4

Interrogating a causal claim.

What features of White et al.’s study made it possible to support the causal claim that pretending to be Batman causes kids to persist longer at a boring task? (The error bars represent the standard error of the estimate. Source: White et al., 2017.)

A Study’s Method Can Establish Temporal Precedence and Internal Validity. Why does the method of manipulating one variable and measuring the other help scientists make causal claims? For one thing, manipulating the independent variable—the causal variable—ensures that it comes first. By manipulating the children’s perspective first and then measuring persistence, White and colleagues ensured temporal precedence in their study.

In addition, when researchers manipulate a variable, they have the potential to control for alternative explanations; that is, they can ensure internal validity. When White and her colleagues were investigating children’s persistence, they ensured that the children in all three conditions were about the same age, because otherwise age would have been an alternative explanation for the results. They also didn’t want the children in the Batman condition to be more intelligent or more introverted, or to have different kinds of parents, than children in the other two conditions, because these could also be alternative explanations.

For more on how random assignment helps ensure that experimental groups are similar, see Chapter 10, pp. 290–291.

Therefore, White and her colleagues used a technique called random assignment to ensure that the children in all the groups were as similar as possible. They used a method, such as rolling a die, to decide whether each child in the study would follow the hardworking hero, third-person, or self-immersed instructions. By randomly assigning children to one of the groups, these researchers could ensure that the children in the Batman condition were as similar as possible, in every other way, to those in the third-person or self-immersed conditions. Random assignment increased internal validity by allowing the researchers to control for potential alternative explanations. They also designed the experiment so the children were all the same age, were all tested in the same laboratory room, worked on the same boring task, and had the same attractive iPad distractor. These methodological choices secured the study’s internal validity.
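Rolling a die separately for each child can leave the groups unequal in size by chance. One common alternative, sketched below in Python, is to shuffle the participant list and then deal the children out to the conditions in turn, so that each group gets the same number. This is an illustrative technique, not a description of White et al.'s actual procedure. The participant IDs and the seed are hypothetical.

```python
import random

CONDITIONS = ["hardworking hero", "third-person", "self-immersed"]


def assign_balanced(participant_ids, conditions, seed=None):
    """Randomly assign participants to conditions in equal-sized groups.

    Shuffle the IDs into a random order, then deal them out to the
    conditions in rotation, like dealing cards.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: conditions[i % len(conditions)] for i, pid in enumerate(shuffled)}


children = [f"child_{i:02d}" for i in range(1, 91)]  # 90 hypothetical participants
assignment = assign_balanced(children, CONDITIONS, seed=2017)
counts = {c: sum(1 for v in assignment.values() if v == c) for c in CONDITIONS}
print(counts)
```

With 90 children and three conditions, each condition receives exactly 30: `{'hardworking hero': 30, 'third-person': 30, 'self-immersed': 30}`. Which children land in which group changes with the seed, but the group sizes do not.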

The White team’s experiment met all three criteria for causation. The results showed covariance, and the method established temporal precedence and internal validity. Therefore, the researchers were justified in making a causal claim from their data. Their study really can be used to support the claim that “pretending to be Batman helps kids stay on task.”

Let’s use two other examples to illustrate how to interrogate causal claims made by writers and journalists.

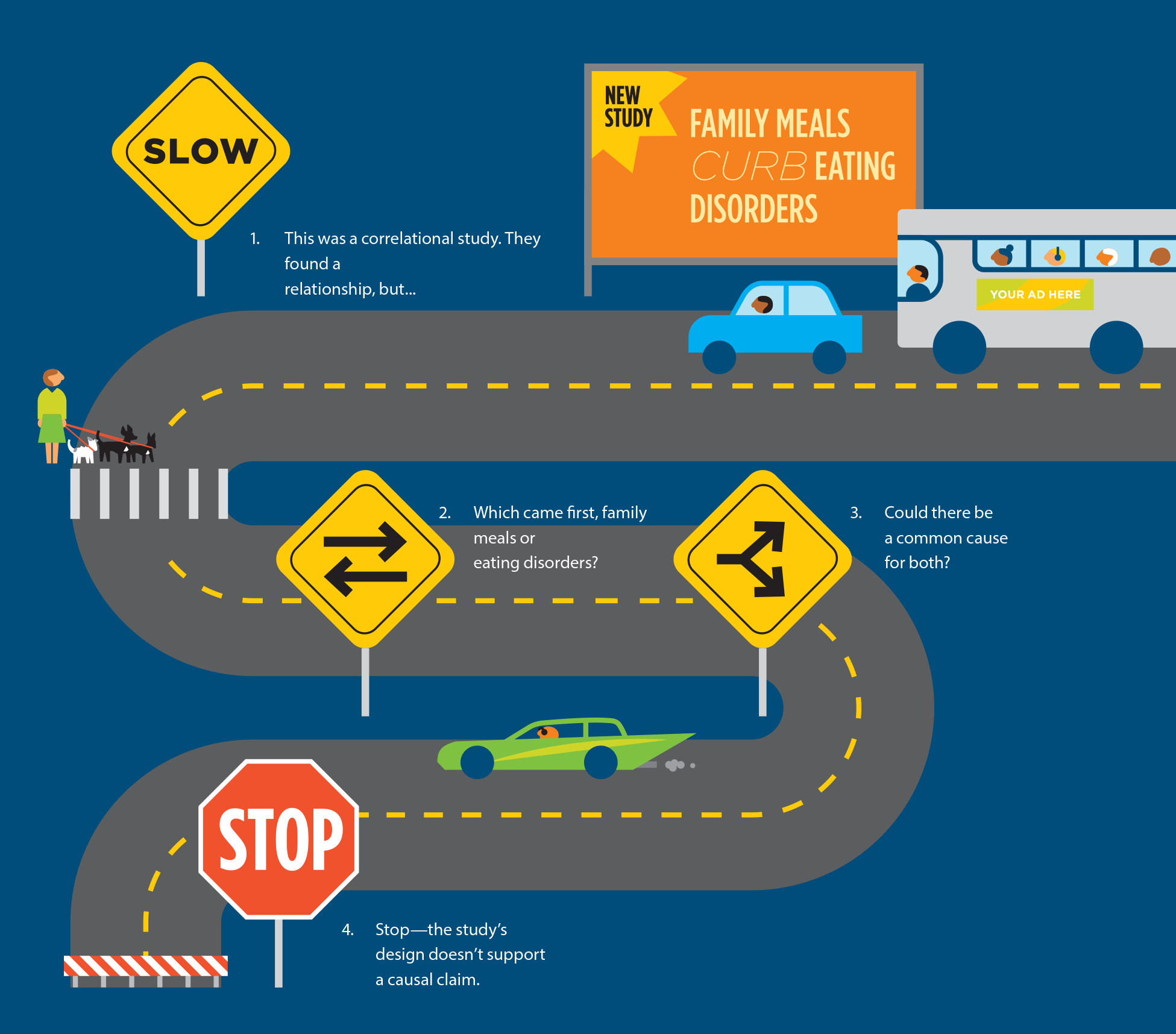

Do Family Meals Really Curb Eating Disorders? To interrogate the causal claim “Family meals curb teen eating disorders” (Infographic Figure 3.5), we start by asking about covariance in the study behind this claim. Is there an association between family meals and eating disorders? Yes: The news report says 26% of girls who ate with their families fewer than five times a week had eating-disordered behavior (e.g., the use of laxatives or diuretics, or self-induced vomiting), and only 17% of girls who ate with their families five or more times a week engaged in eating-disordered behaviors (Warner, 2008). The two variables are associated.

Navigating Causal Claims: Do Family Meals Really Curb Eating Disorders?

Journalists might make a causal claim from a correlational study. But correlational studies don’t establish temporal precedence or internal validity.

What about temporal precedence? Did the researchers make sure family meals had increased before the eating disorders decreased? No. Both variables were measured at the same time, so temporal precedence is not clear from this association. In fact, one of the symptoms of an eating disorder is embarrassment about eating in front of others, so perhaps the eating disorder came first and the decreased family meals came second. Daughters with eating disorders may simply find excuses to avoid eating with their families.

Internal validity is a problem here, too. Without an experiment, we cannot rule out many alternative, third-variable explanations. Perhaps girls from dual-earner families are less likely to eat with their families and are more vulnerable to eating disorders, whereas girls who have only one working parent are less vulnerable. Maybe academically high-achieving girls are too busy to eat with their families and are also more susceptible to eating-disordered behavior. These are just two possible alternative explanations. Only a well-run experiment could have controlled for these internal validity problems (the alternative explanations), using random assignment to ensure that the girls who had frequent family dinners and those who had less frequent family dinners were comparable in all other ways: dual- versus single-income households, high versus low scholastic achievement, and so on. However, it would be impractical and probably unethical to conduct such an experiment.

Although the study’s authors reported the findings appropriately, the journalist wrapped the study’s results in an eye-catching causal conclusion by saying that family dinners curb eating disorders. The journalist should probably have headlined the study with an association claim, such as “Family dinners are linked to fewer eating disorders.”

Does Social Media Pressure Cause Teen Anxiety? Another example of a dubious causal claim is this headline: “Pressure to be available 24/7 on social media causes teen anxiety.” In the story, the journalist reported on a study that measured two variables in a set of teenagers. One variable was social media use (especially the degree of pressure to respond to texts and posts), and the other was level of anxiety (ScienceDaily, 2015). The researchers found that those who felt pressure to respond immediately also had higher levels of anxiety.

Let’s see if this study’s design is adequate to support the journalist’s conclusion—that social media pressure causes anxiety in teenagers. The study certainly does have covariance: The results showed that teens who felt more pressure to respond immediately to social media were also more anxious. However, this was a correlational study, in which both variables were measured at the same time, so temporal precedence was not established. We cannot know if the pressure to respond to social media increased first, thereby leading to increased anxiety, or if teens who were already anxious expressed their anxiety through social media use, by putting pressure on themselves to respond immediately.

In addition, this study did not rule out possible alternative explanations (internal validity) because it was not an experiment. Several outside variables could potentially correlate with both anxiety and responding immediately to social media. One might be that teens who are involved in athletics are more relaxed (because exercise can reduce anxiety) and less engaged in social media (because busy schedules limit their time). Another might be that certain teenagers are vulnerable to emotional disorders in general. They are already more anxious and feel more pressure about the image they’re presenting on social media (Figure 3.6).

Figure 3.6

Support for a causal claim?

Without conducting an experiment, researchers cannot support the claim that social media pressure causes teen anxiety.

An experiment could potentially rule out such alternative explanations. In this example, though, conducting an experiment would be hard. A researcher cannot randomly assign teens to be concerned about social media or to be anxious. Because the research was not enough to support a causal claim, the journalist should have packaged the description of the study under an association claim headline: “Social media pressure and teen anxiety are linked.”

A study can support a causal claim only if its results demonstrate covariance and only if it uses the experimental method, thereby establishing temporal precedence and internal validity. For this reason, internal validity is often the most important validity to evaluate for causal claims. Besides internal validity, the other three validities discussed in this chapter (construct validity, statistical validity, and external validity) should be interrogated, too.

For more on how researchers use data to check the construct validity of their manipulations, see Chapter 10, pp. 303–305.

Construct Validity of Causal Claims. Take the headline “Pretending to be Batman helps kids stay on task.” First, we could ask about the construct validity of the measured variable in this study. How well was “staying on task” measured? Is persistence at the slow, boring computer task an acceptable measure of this variable? Then we would need to interrogate the construct validity of the manipulated variable. In operationalizing manipulated variables, researchers must create a specific task or situation that will represent each level of the variable. In the current example, were the costume and the question “Is Batman working hard?” the best manipulation of the construct “pretending to be a hardworking hero”?

External Validity of Causal Claims. We could ask, in addition, about external validity. The study tested 6-year-old children from Minneapolis, Minnesota. Can the results from this sample generalize to children from other states or other countries? Would they generalize to younger kids? What about generalization to other situations: could the results generalize to other targets, such as pretending to be an admired teacher? (In Chapters 10 and 14, you’ll learn more about how to evaluate the external validity of experiments and other studies.)

For more on determining the strength of a relationship between variables, see Statistics Review: Descriptive Statistics, pp. 468–472.

Statistical Validity of Causal Claims. We can also interrogate statistical validity. To start, we would ask: How large was the difference between the groups? In this example, participants in the Batman condition persisted about 60% of the time, compared with 35% of the time for those in the self-immersed condition, which is nearly twice as long. That seems like a fairly large effect. Next, we can ask about the precision of this estimate. The error bars in Figure 3.4 show the precision of each percentage estimate. They represent standard errors; a 95% confidence interval would extend roughly two standard errors on either side of each estimate. These bars are somewhat wide, reflecting the relatively small sample size. We can also ask whether this study has been repeated, so that we can consider estimates from multiple studies over time. (In Chapter 10, you’ll learn more about interrogating the statistical validity of causal claims.)
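The arithmetic behind these questions can be sketched in a few lines of Python. The percentages are the approximate values reported above. The standard errors are hypothetical placeholders, because the exact values would have to be read from the error bars in Figure 3.4. The sketch converts each standard error into an approximate 95% confidence interval (estimate ± 1.96 standard errors). This assumes a roughly normal sampling distribution; with small groups, a slightly larger multiplier from the t distribution would be more accurate. The sketch then expresses the Batman versus self-immersed difference both in percentage points and as a ratio.

```python
# Approximate percentages of time spent on the boring task, from the text.
time_on_task = {"hardworking hero": 60.0, "third-person": 45.0, "self-immersed": 35.0}

# Hypothetical standard errors in percentage points (placeholders, not study data).
standard_error = {"hardworking hero": 5.0, "third-person": 5.0, "self-immersed": 5.0}


def ci_from_se(estimate, se, z=1.96):
    """Approximate 95% CI: the estimate plus or minus z standard errors."""
    return estimate - z * se, estimate + z * se


for condition, pct in time_on_task.items():
    low, high = ci_from_se(pct, standard_error[condition])
    print(f"{condition:>16}: {pct:.0f}% (95% CI roughly {low:.0f}% to {high:.0f}%)")

# Effect size expressed two ways: raw difference and ratio.
difference = time_on_task["hardworking hero"] - time_on_task["self-immersed"]
ratio = time_on_task["hardworking hero"] / time_on_task["self-immersed"]
print(f"difference: {difference:.0f} percentage points; ratio: {ratio:.2f}")
```

The difference works out to 25 percentage points and the ratio to about 1.71. This is the sense in which the Batman group persisted "nearly twice as long."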

Which of the four validities is the most important? It depends. When researchers plan studies, they usually find it impossible to conduct a study that satisfies all four validities at once. Depending on their goals, sometimes researchers place a lower priority on certain validities. They decide where their emphasis lies—and so will you, when you participate in producing your own research.

External validity, for instance, is not always possible to achieve, and sometimes it may not be the researcher’s priority. As you’ll learn in Chapter 7, to be able to generalize results from a sample to a wide population requires a representative sample from that population. Consider the study on children’s persistence at a task. Because the researchers were planning to test a causal claim, they wanted to emphasize internal validity, so they focused on making the children in the three groups (self-immersed, third-person, and hardworking hero) as equivalent as possible. They were not prioritizing external validity and did not try to sample children from all over the country. However, the study is still important and interesting because it used an internally valid experimental method, even though it did not achieve external validity. Furthermore, although they used a sample of children from Minneapolis, there may be no theoretical reason to assume that the hardworking hero instructions would not improve the persistence of rural children, too. Future research could confirm that the intervention works for other groups of children just as well, but there is no obvious reason to expect otherwise.

In contrast, if some researchers were conducting a telephone survey and did want to generalize its results to, say, the entire Canadian population (to maximize external validity), they would have to randomly select Canadians from all ten provinces and three territories. One approach would be to use a random-digit telephone dialing system to call people in their homes, but this technology is expensive. When researchers do use formal, randomly sampled polls, they often have to pay a polling company a fee to administer each question. Therefore, a researcher who wants to evaluate, say, depression levels in a large population may be economically forced to use a short questionnaire or survey. A 2-item measure might not be as good as a 15-item measure, but the longer one would cost more. In this example, the researcher might sacrifice some construct validity in order to achieve external validity.

You’ll learn more about these priorities in Chapter 14. The point, for now, is simply that in the course of planning and conducting a study, researchers weigh the pros and cons of methodology choices and decide which validities are most important. When you read about a study, you should not necessarily conclude it is faulty just because it did not meet one of the validities.